Result description

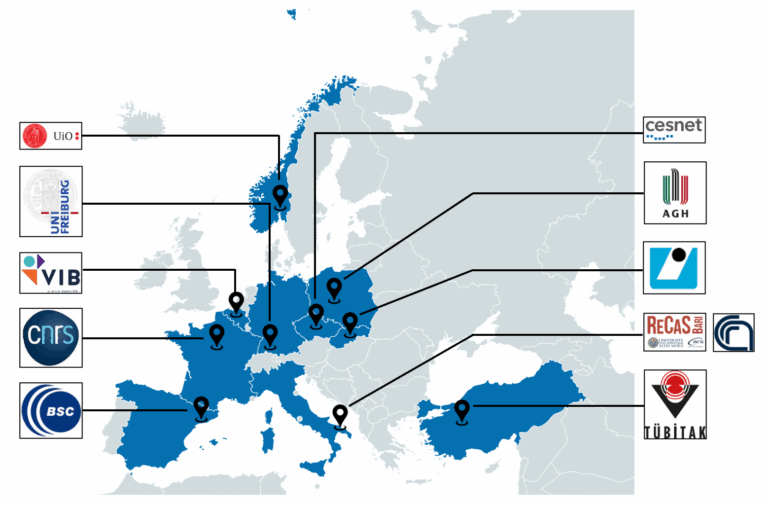

Pulsar Network is a federated, production-ready (TRL-9) job-execution layer that lets public Galaxy servers and TES-compatible workflow systems run tasks across national HPC, cloud and institutional clusters via site-hosted “Pulsar endpoints.” It adds a secure, behind-firewall pull model with automated data staging, and its Open Infrastructure automation (Terraform+Ansible) makes endpoint deployment and upkeep repeatable. Tools and reference data are shared via CVMFS, and reliability is continuously checked by SABER and visualised with Galaxy Job Radar. The network currently links ~13 endpoints in ~10 countries to six national Galaxy servers plus usegalaxy.eu. Interoperability is ensured by a GA4GH TES interface and WfExS integration, and EuroScienceGateway adds Bring-Your-Own-Compute/Storage (BYOC/BYOS) and meta-scheduling to balance load across providers. The result reduces fragmentation, improves reproducibility, and makes European compute capacity more accessible to researchers while giving research infrastructures (RIs) a low-friction path to expose resources.

Addressing target audiences and expressing needs

- Use of research Infrastructure

- Collaboration

We are seeking research and technology organisations (RTOs) and university compute centres to host Pulsar execution endpoints and join the network.

Needs: run a Pulsar node on your scheduler (Slurm/HTCondor/PBS) with outbound HTTPS, container support (Apptainer/Singularity or Docker) and POSIX/S3 storage; offer a small pilot allocation (~50k core-hours/month, optional GPUs, 5–10 TB of storage); integrate AAI (eduGAIN/OIDC) and lightweight accounting; expose GA4GH TES where feasible; nominate a technical point of contact (PoC); collaborate on reliability, connectors, and training.

- Research and Technology Organisations

- Academia/Universities

R&D, Technology and Innovation aspects

Current stage: Production deployment (TRL-9). Pulsar Network is operating across multiple European sites with routine use by public Galaxy servers.

Who we’re targeting for support: Public or private funding institutions (EU/national RI programmes) and other actors who can help us fulfil our potential (HPC centres, cloud providers via in-kind compute/credits and co-development).

Pulsar Network scales horizontally by onboarding new site-hosted endpoints that can be added/removed and even shared across multiple Galaxy servers. Automated “Open Infrastructure” (Terraform+Ansible) cuts time-to-production, while a behind-firewall pull model with RabbitMQ, CVMFS for tools/reference data, and GA4GH TES interoperability keep quality high as demand grows and technology stacks vary. Cloud and HPC are both supported and already validated across multiple countries.

Operational efficiency improves with scale: a small core SRE team plus local points of contact (PoCs) runs a continuously monitored federation (SABER) with meta-scheduling that balances load using live endpoint metrics; BYOC/BYOS expands capacity at marginal cost by reusing existing compute/storage. We target public/private funding institutions and other actors (HPC centres, clouds) for in-kind capacity and core ops support, not equity investment.
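To make the idea of balancing load from live endpoint metrics concrete, the following is a minimal sketch only, not the scheduler used in production. The `EndpointMetrics` fields, the site names and the selection rule (shortest queue among healthy endpoints with enough free cores) are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class EndpointMetrics:
    """Hypothetical snapshot of one endpoint's live state (illustrative fields)."""
    name: str
    healthy: bool      # e.g. the most recent reliability check passed
    free_cores: int    # idle cores currently reported by the site
    queued_jobs: int   # jobs already waiting at the endpoint


def pick_endpoint(endpoints, cores_needed):
    """Return the name of the least-loaded healthy endpoint, or None."""
    candidates = [
        e for e in endpoints
        if e.healthy and e.free_cores >= cores_needed
    ]
    if not candidates:
        return None
    # Assumed rule: prefer the shortest queue, break ties by most free cores.
    best = min(candidates, key=lambda e: (e.queued_jobs, -e.free_cores))
    return best.name


# Made-up example data; real metrics would come from monitoring.
endpoints = [
    EndpointMetrics("site-a", healthy=True, free_cores=64, queued_jobs=12),
    EndpointMetrics("site-b", healthy=True, free_cores=16, queued_jobs=3),
    EndpointMetrics("site-c", healthy=False, free_cores=256, queued_jobs=0),
]

print(pick_endpoint(endpoints, cores_needed=8))    # site-b
print(pick_endpoint(endpoints, cores_needed=32))   # site-a
```

In this sketch an unhealthy endpoint is never selected, however much idle capacity it reports, which mirrors the role continuous monitoring plays in the federation.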

Each site deploys a standard “Pulsar endpoint” using open Infrastructure-as-Code (Terraform+Ansible) and a documented reference architecture. Minimal prerequisites (Linux, Slurm/HTCondor/PBS, outbound HTTPS, Apptainer/Docker, POSIX/S3) keep variability low. A behind-firewall pull model and GA4GH TES interface expose a uniform service. CVMFS and containers deliver the same signed, versioned tools/data everywhere. An onboarding kit (playbooks, conformance tests, monitoring and SLOs) verifies each deployment, with MoU/policy templates aligning ops and security. The service can be replicated across institutions/countries to deliver the same quality at scale through Galaxy.
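As an illustration of how the minimal prerequisites listed above could be checked before onboarding, here is a hedged sketch. It is not part of the actual onboarding kit or its conformance tests; the site-description format, the key names and the function `missing_prerequisites` are assumptions made for this example.

```python
# For each capability, a site must offer at least one of the accepted options.
# The option lists follow the prerequisites named in the text.
REQUIRED_ANY = {
    "scheduler": {"slurm", "htcondor", "pbs"},
    "container_runtime": {"apptainer", "docker"},
    "storage": {"posix", "s3"},
}


def missing_prerequisites(site):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    if site.get("os") != "linux":
        problems.append("os must be linux")
    if not site.get("outbound_https", False):
        problems.append("outbound HTTPS is required")
    for key, accepted in REQUIRED_ANY.items():
        offered = set(site.get(key, []))
        if not offered & accepted:
            problems.append(f"{key} must include one of {sorted(accepted)}")
    return problems


# Hypothetical site description, e.g. filled in by a local administrator.
candidate_site = {
    "os": "linux",
    "outbound_https": True,
    "scheduler": ["slurm"],
    "container_runtime": ["apptainer"],
    "storage": ["posix", "s3"],
}

print(missing_prerequisites(candidate_site))  # []
```

A check of this kind only confirms that the declared capabilities match the list; verifying that a deployed endpoint actually works is a separate step, performed after deployment.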

Pulsar Network applies a sustainable business model. Stakeholder value: reliable, data-local execution for researchers; safe capacity exposure with full policy/quota control for RTOs & universities; measurable reliability and cross-border reuse of existing investments for funders. Creation & delivery: open-source stack, GA4GH-compatible interfaces, Infrastructure-as-Code for reproducible ops, behind-firewall pull model, continuous monitoring/training, transparent SLOs. Value capture: lightweight core ops via public RI programmes; in-kind compute/credits; project co-funding for new connectors; optional support/training packages. Natural/social/economic capital: data-locality reduces transfers; re-use existing sites over new build-outs; roadmap for cost/energy/carbon-aware scheduling; open governance and community skills that outlast grants.

- Europe

- Global

Result submitted to Horizon Results Platform by ALBERT-LUDWIGS-UNIVERSITAET FREIBURG