Result description

Matrix multiplication consumes huge amounts of computing time and energy, primarily in AI applications. The industry has recognized the need for faster and more energy-efficient matrix multiplication, with state-of-the-art solutions in software (e.g., NVIDIA’s CUDA for GPUs) and hardware (e.g., Google’s TPU). Unfortunately, all present solutions employ a wasteful cubic-time algorithm, whose number of multiplications grows as n^3 for n × n matrices.

We have developed a speedup for matrix multiplication that can reduce the high compute costs of Gen-AI, minimize latency, shrink the energy footprint, and shorten time to market for foundation-model companies.

Addressing target audiences and expressing needs

- Business Angels

- Venture Capital

Initial funding and industry collaboration

- Research and Technology Organisations

- Academia/Universities

- Private Investors

R&D, Technology and Innovation aspects

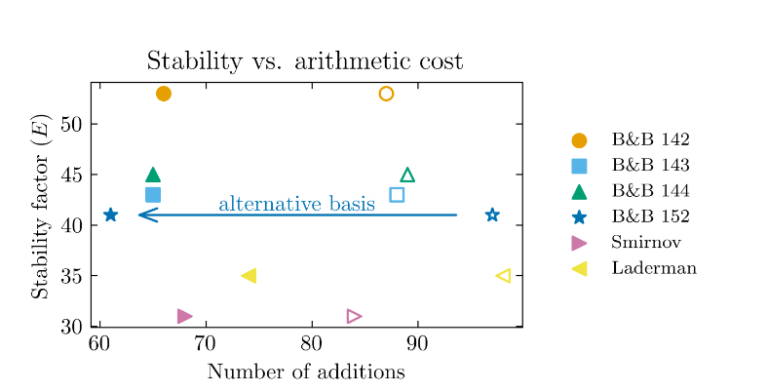

We have demonstrated that alternative-basis fast matrix multiplication algorithms can be implemented on CPU and GPU hardware and can outperform vendor-tuned libraries.
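To make the difference between a cubic-time and a fast (sub-cubic) algorithm concrete, the sketch below counts scalar multiplications for the classical method and for Strassen's classic recursion. Strassen's algorithm is used here only as a well-known stand-in: it is not the algorithm described in this result, and the function names (`classical`, `strassen`, `split`) are illustrative assumptions. The sketch assumes square matrices whose size is a power of two and makes no attempt at performance.

```python
# Illustrative only: Strassen's recursion as a generic example of fast
# matrix multiplication. It is NOT the algorithm described in this document.

def mat_add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def mat_sub(a, b):
    return [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def classical(a, b, counter):
    """Cubic-time product; counter[0] accumulates scalar multiplications."""
    n = len(a)
    c = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            total = 0
            for k in range(n):
                total += a[i][k] * b[k][j]
                counter[0] += 1
            c[i][j] = total
    return c

def split(m):
    """Split a square matrix into its four quadrants."""
    h = len(m) // 2
    return ([r[:h] for r in m[:h]], [r[h:] for r in m[:h]],
            [r[:h] for r in m[h:]], [r[h:] for r in m[h:]])

def strassen(a, b, counter):
    """Strassen product: 7 recursive multiplications per level instead of 8."""
    n = len(a)
    if n == 1:
        counter[0] += 1
        return [[a[0][0] * b[0][0]]]
    a11, a12, a21, a22 = split(a)
    b11, b12, b21, b22 = split(b)
    m1 = strassen(mat_add(a11, a22), mat_add(b11, b22), counter)
    m2 = strassen(mat_add(a21, a22), b11, counter)
    m3 = strassen(a11, mat_sub(b12, b22), counter)
    m4 = strassen(a22, mat_sub(b21, b11), counter)
    m5 = strassen(mat_add(a11, a12), b22, counter)
    m6 = strassen(mat_sub(a21, a11), mat_add(b11, b12), counter)
    m7 = strassen(mat_sub(a12, a22), mat_add(b21, b22), counter)
    c11 = mat_add(mat_sub(mat_add(m1, m4), m5), m7)
    c12 = mat_add(m3, m5)
    c21 = mat_add(m2, m4)
    c22 = mat_add(mat_add(mat_sub(m1, m2), m3), m6)
    top = [left + right for left, right in zip(c11, c12)]
    bottom = [left + right for left, right in zip(c21, c22)]
    return top + bottom

# Small integer test matrices (made-up data) so the comparison is exact.
n = 8
a = [[i * n + j for j in range(n)] for i in range(n)]
b = [[(i + 2 * j) % 5 for j in range(n)] for i in range(n)]
cubic_count, fast_count = [0], [0]
assert classical(a, b, cubic_count) == strassen(a, b, fast_count)
print(cubic_count[0], fast_count[0])  # 512 343
```

For 8 × 8 inputs the classical method performs 8^3 = 512 scalar multiplications, while the recursion performs 7^3 = 343, and the gap widens as the matrices grow. The extra additions and data movement that such recursions introduce are what make it hard to turn this saving into real speedups on actual hardware.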

The next stages are to measure the actual speedups of AI workloads in customer-defined scenarios and to measure the resulting energy savings. In addition, customer input on research and development directions needs to be compiled into programs that can eventually serve as a basis for useful products.

The solution can be scaled across multiple AI GPU types and is applicable to a wide range of AI model families, such as LLMs, Vision Transformers, and robotics 3D algorithms (e.g., object manipulation and navigation), for both data center and edge AI use cases.

The result may apply to all GPUs and CPUs performing AI workloads, and to all Gen-AI models.

The innovative technology is protected by patents and may be implemented in software. In the more distant future, it will also be implemented in hardware.